I Built GPT from Scratch Using a YouTube Video and Claude Code

Without Coding, in Under an Hour

I wanted to understand how GPT works. Not the theory. The real thing.

So I built one from scratch.

No coding experience needed.

Just Claude Code and a YouTube video.

Here’s what happened.

The Video That Started It All

Andrej Karpathy is the former Director of AI at Tesla.

Before that, he was at OpenAI.

He made a 2-hour video called “Let’s build GPT: from scratch, in code, spelled out.”

I watched it. Understood some of it. The material was dense.

The code was complicated.

But I had Claude Code.

Step 1: Building The Structure

First, I created a folder named GPT.

Inside it, I put two files:

Transcript

Resources

The transcript file contains the YouTube transcript of Andrej’s video.

The resources file contains the links and everything else Andrej lists in the video’s description.

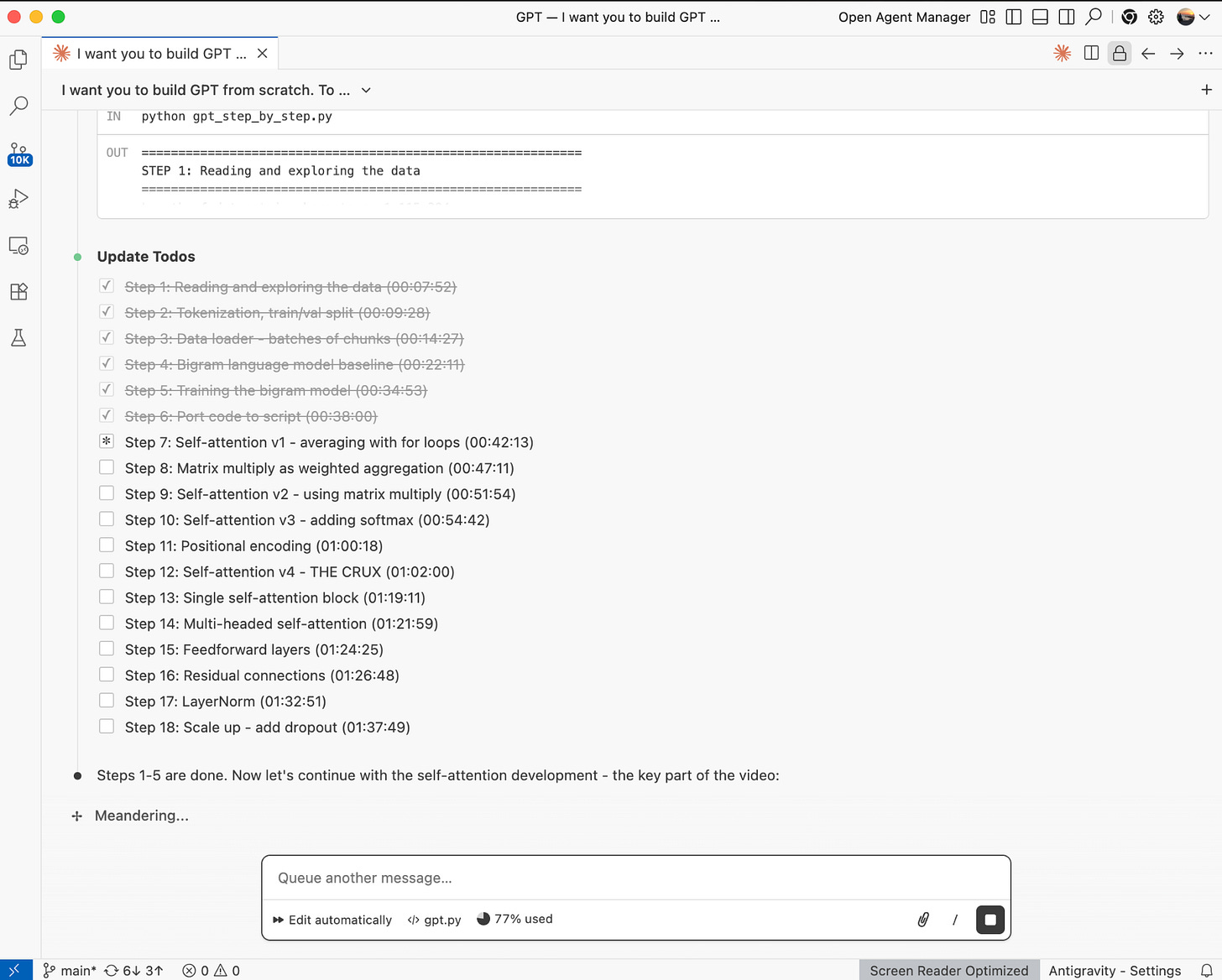

Step 2: Claude Code

My idea started with curiosity. I didn’t think I could pull it off.

Even my initial prompt shows this.

I want you to build GPT from scratch.

To do that, follow the transcript.

Also check resources inside if needed.

Can we do this together? Let’s see.

It got to work.

Time elapsed: 5 minutes.

I didn’t write a single line of code.

Step 3: The Training Problem

I hit run on my MacBook.

Bad idea.

The fan started screaming. The laptop got hot. Progress bar stuck at 0%.

Training a neural network needs serious GPU power. My laptop couldn’t supply it.

I stopped the process. My computer cooled down.

New plan: Google Colab.

Step 4: Google Colab and the T4 GPU

Google Colab gives you free GPU access, within usage limits. You just need a Google account.

I opened Colab. Selected T4 GPU from the runtime menu. Uploaded the training notebook.

Hit run.

The progress bar moved. 1%. 5%. 10%.

I watched it climb. 25%. 40%. 59%.

The training data was Shakespeare. About 1.1 megabytes of text from his plays, known as the Tiny Shakespeare dataset.

The model was learning patterns. Character by character.
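“Character by character” is literal here. In Karpathy’s video, the vocabulary is simply the set of distinct characters in the text. Each training example asks the model to predict the next character. Below is a minimal sketch of that setup in plain Python. It uses a one-line sample in place of the real 1.1 MB file. The names `stoi`, `itos`, `encode`, and `decode` mirror the video, but this is an illustration, not the exact code Claude Code produced.

```python
# Character-level tokenizer plus next-character training pairs.
# Assumption: a one-line sample stands in for the full Tiny Shakespeare text.
text = "First Citizen:\nBefore we proceed any further, hear me speak."

# The vocabulary is every distinct character in the training text.
chars = sorted(set(text))
stoi = {ch: i for i, ch in enumerate(chars)}  # character -> integer id
itos = {i: ch for ch, i in stoi.items()}      # integer id -> character

def encode(s: str) -> list[int]:
    """Turn a string into a list of integer token ids."""
    return [stoi[c] for c in s]

def decode(ids: list[int]) -> str:
    """Turn a list of integer token ids back into a string."""
    return "".join(itos[i] for i in ids)

data = encode(text)

# x is a window of characters; y is the same window shifted one step right.
# At every position t, the target y[t] is the character that follows x[:t+1].
block_size = 8
x = data[:block_size]
y = data[1:block_size + 1]
for t in range(block_size):
    context = decode(x[: t + 1])
    target = decode([y[t]])
    print(f"{context!r} -> {target!r}")
```

On the full Tiny Shakespeare file, this vocabulary step yields 65 distinct characters.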

51 minutes later, training finished. Loss dropped from 4.2 to 1.4.
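The starting loss of 4.2 is not arbitrary. Assume a 65-character vocabulary, as in the Tiny Shakespeare setup from Karpathy’s video. An untrained model that guesses uniformly at random should score about ln 65 ≈ 4.17. This small sketch checks that and converts both losses into perplexity:

```python
import math

# Assumption: a character-level vocabulary of 65 symbols (Tiny Shakespeare).
vocab_size = 65

# A model that spreads probability evenly gives the correct next character
# probability 1/65. Cross-entropy loss is the negative log of that probability.
initial_loss = -math.log(1 / vocab_size)
print(f"expected starting loss: {initial_loss:.3f}")  # 4.174

# Perplexity (e ** loss) is roughly how many characters the model is
# effectively choosing between at each step.
for loss in (initial_loss, 1.4):
    print(f"loss {loss:.2f} -> perplexity {math.exp(loss):.1f}")
```

A final loss of 1.4 corresponds to a perplexity of about 4. Loosely speaking, the model narrowed 65 possible next characters down to about four plausible ones.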

I had a working GPT model.

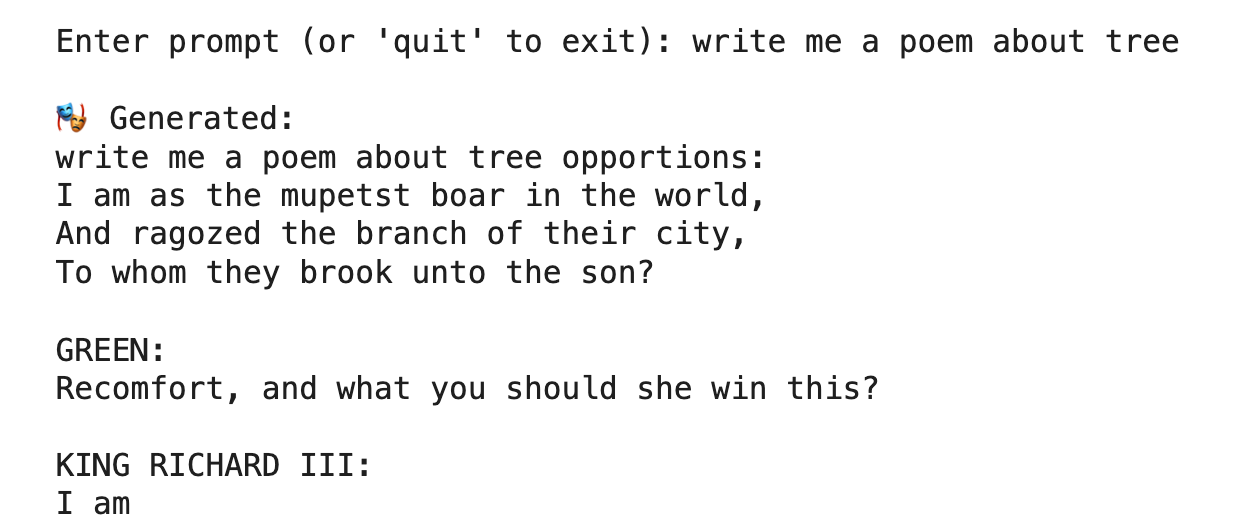

I entered the prompt “Write me a poem about tree,” and here is the result.

It looks good, but running it in Colab isn’t practical.

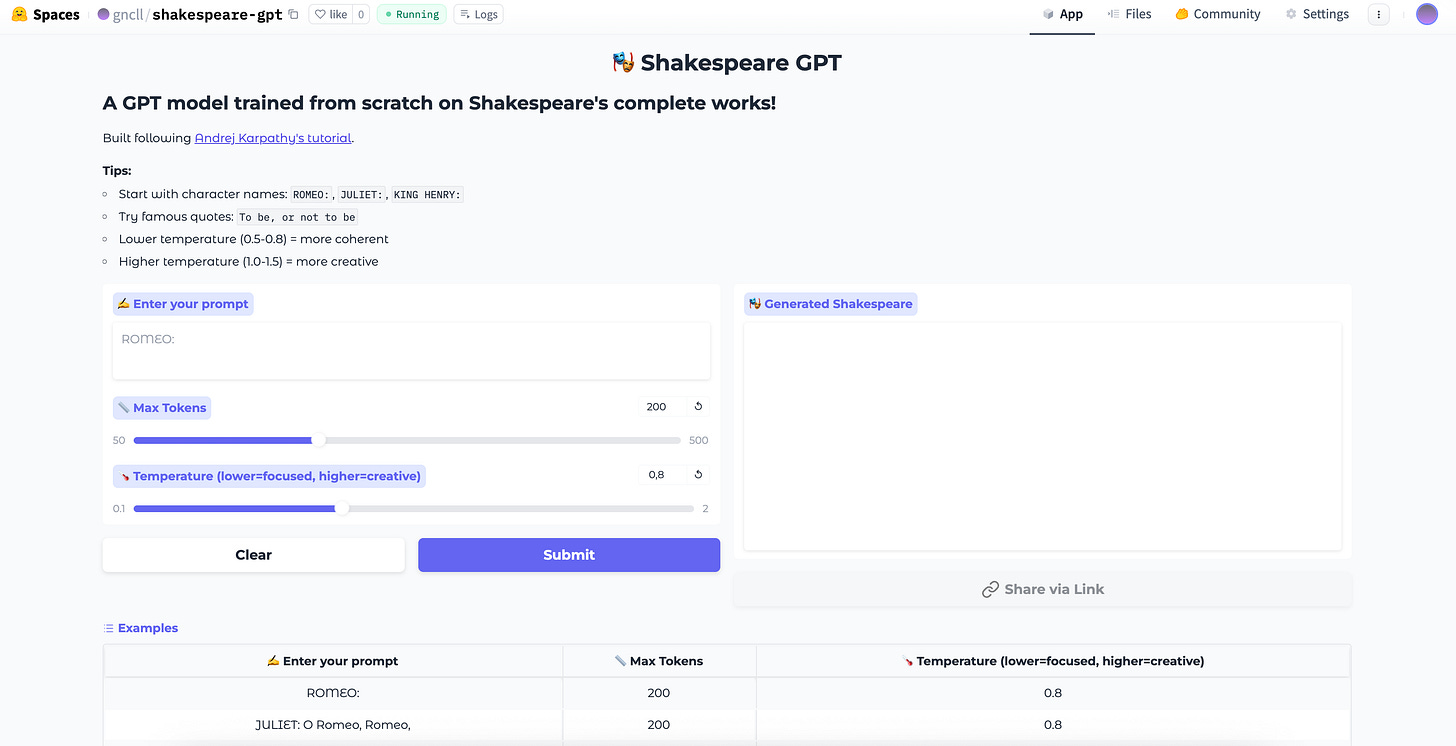

Step 5: Deploying to the Web

A model stuck in a Colab notebook is useless to everyone else. I wanted anyone to be able to try it.

Hugging Face offers free hosting for AI models.

You upload three files.

They handle the rest.

File 1: The app code (Gradio interface)

File 2: Requirements (PyTorch and Gradio)

File 3: The trained model weights (43MB)
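The 43 MB figure follows from the parameter count. This assumes the weights are saved as uncompressed 32-bit floats, which is PyTorch’s default dtype, so each parameter takes 4 bytes:

```python
# Back-of-the-envelope size of the weights file.
# Assumption: float32 weights (4 bytes each), no compression.
num_params = 10_800_000  # "10.8 million parameters", as reported in this post
bytes_per_param = 4      # float32

size_bytes = num_params * bytes_per_param
print(f"{size_bytes:,} bytes = {size_bytes / 1e6:.1f} MB")
```

A 16-bit (half-precision) copy of the same weights would be roughly half that size.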

I uploaded everything. Hit deploy.

Two minutes later, it was live.

Try it yourself: https://huggingface.co/spaces/gncll/shakespeare-gpt

Why Does It Talk Like Shakespeare?

Because that’s all it knows.

The model learned from Shakespeare’s plays. Nothing else. So it generates Shakespeare-style text.

Ask it about Python. It gives you Shakespeare.

Ask it about weather. Shakespeare.

Ask it anything. Shakespeare.

This is important to understand.

GPT models are pattern machines. They learn from data. They reproduce patterns from that data.

ChatGPT talks like a helpful assistant because it was fine-tuned on helpful assistant conversations.

My model talks like Shakespeare because it trained on Shakespeare.

Same architecture. Different data. Different output.
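The “pattern machine” idea can be seen in miniature with a character bigram model. Karpathy starts the video with a bigram model before building up to the transformer. This sketch uses a simpler counting-based variant, not a GPT. Both training strings are made-up toy examples. The model code is identical for both runs, and only the data changes:

```python
import random
from collections import Counter, defaultdict

def train_bigram(text: str) -> dict[str, Counter]:
    """Count, for every character, which characters follow it and how often."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(text, text[1:]):
        counts[current][nxt] += 1
    return counts

def generate(counts: dict[str, Counter], start: str, length: int, seed: int = 0) -> str:
    """Sample one character at a time, weighted by the learned counts."""
    rng = random.Random(seed)
    out = [start]
    for _ in range(length):
        followers = counts.get(out[-1])
        if not followers:  # character never seen with a successor: stop early
            break
        chars, weights = zip(*followers.items())
        out.append(rng.choices(chars, weights=weights)[0])
    return "".join(out)

# Same model code, two hypothetical toy training texts.
shakespeare = "to be or not to be that is the question whether tis nobler in the mind"
python_docs = "def main(): print(len(items)) return sorted(items, key=len) if items else None"

for name, corpus in [("shakespeare", shakespeare), ("python", python_docs)]:
    model = train_bigram(corpus)
    print(name, "->", generate(model, start="t", length=40))
```

The Shakespeare-trained version can never print a parenthesis, because it never saw one. Scale that up, and you get a model that answers every question in Shakespearean English.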

What Would It Take to Build ChatGPT?

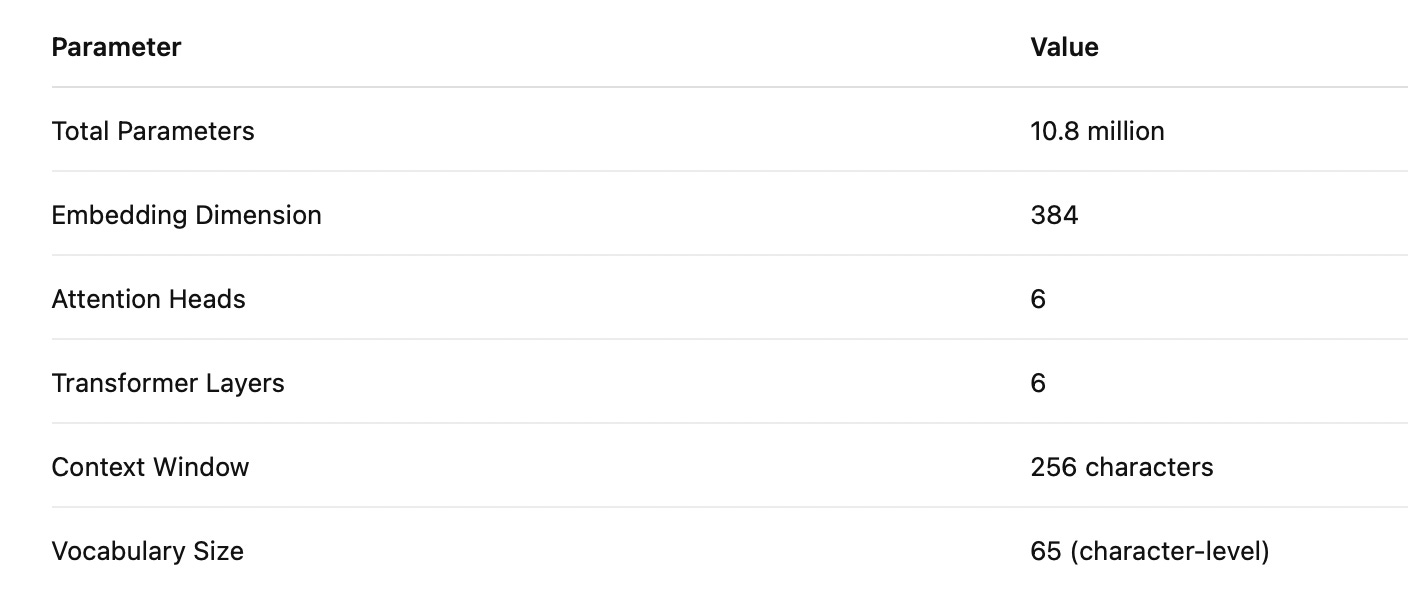

My model: 10.8 million parameters.

GPT-3: 175 billion parameters.

GPT-4: Estimated 1 trillion+ parameters.

My training data: 1.1 MB of Shakespeare.

ChatGPT training data: Trillions of tokens from the internet.

My training time: 51 minutes on a free GPU.

GPT-4 training time: Months on thousands of GPUs.

My cost: $0.

GPT-4 estimated cost: $100 million+.

The architecture is the same. The scale is different.

Andrej Karpathy made this video to show that the fundamentals are simple. Anyone can understand them.

The magic is in the scale.

The Model Specs
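Assume the model uses the final hyperparameters from the `gpt.py` script that accompanies Karpathy’s video. That is an assumption, since these exact settings are not confirmed for this build. Under it, counting the weights layer by layer lands on the 10.8 million figure:

```python
# Parameter count for a Karpathy-style character-level GPT.
# Assumption: these are the final hyperparameters from Karpathy's gpt.py.
vocab_size = 65   # distinct characters in Tiny Shakespeare
n_embd = 384      # embedding width
block_size = 256  # maximum context length, in characters
n_head = 6        # attention heads per block
n_layer = 6       # transformer blocks

def linear(n_in: int, n_out: int, bias: bool = True) -> int:
    """Parameters in a fully connected layer: weights plus optional bias."""
    return n_in * n_out + (n_out if bias else 0)

def layer_norm(n: int) -> int:
    """LayerNorm has one scale and one shift parameter per feature."""
    return 2 * n

head_size = n_embd // n_head
attention = n_head * 3 * linear(n_embd, head_size, bias=False)  # key, query, value per head
attention += linear(n_embd, n_embd)                             # output projection
feed_forward = linear(n_embd, 4 * n_embd) + linear(4 * n_embd, n_embd)
block = attention + feed_forward + 2 * layer_norm(n_embd)

total = (
    vocab_size * n_embd             # token embedding table
    + block_size * n_embd           # position embedding table
    + n_layer * block               # stacked transformer blocks
    + layer_norm(n_embd)            # final LayerNorm
    + linear(n_embd, vocab_size)    # language-model head
)
print(f"{total:,} parameters")  # 10,788,929
```

Nearly all the parameters sit in the six transformer blocks, about 1.77 million each. The embeddings and output head add only about 150,000. GPT-3’s 175 billion is roughly 16,000 times this count.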

What I Learned

1. Claude Code is fast.

5 minutes to write a complete transformer implementation.

Following a 2-hour video. No errors.

2. Local training doesn’t work.

My laptop couldn’t train this model in any reasonable time.

Use cloud GPUs.

Colab is free.

3. The basics are accessible.

The same attention mechanism in my toy model powers GPT-4.

Same math. Similar code structure.

4. Deployment is easy.

Hugging Face Spaces. Three files. Two minutes. Free hosting.

5. Data shapes output.

Shakespeare in, Shakespeare out. The model is a mirror of its training data.
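Point 3 refers to scaled dot-product attention, softmax(QKᵀ / √d)·V. A causal mask makes each position look only backward. Below is a dependency-free sketch of that single operation on made-up toy vectors. Real implementations, including Karpathy’s, compute the same thing with PyTorch matrix operations and learned projections that produce q, k, and v. Here, q, k, and v are simply given.

```python
import math

def softmax(scores: list[float]) -> list[float]:
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def causal_self_attention(q, k, v):
    """Scaled dot-product attention with a causal mask.

    q, k, v are lists of vectors, one per position. Position t may only
    attend to positions 0..t, so it cannot peek at future characters.
    Returns (outputs, weights).
    """
    d = len(q[0])
    outputs, all_weights = [], []
    for t in range(len(q)):
        # Scores against the current and earlier positions only (the mask).
        scores = [sum(a * b for a, b in zip(q[t], k[s])) / math.sqrt(d) for s in range(t + 1)]
        weights = softmax(scores)
        # Output is the weighted average of the visible value vectors.
        out = [sum(w * v[s][i] for s, w in enumerate(weights)) for i in range(len(v[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Toy example: 3 positions, 2-dimensional vectors (values chosen arbitrarily).
q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
k = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
v = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
outputs, weights = causal_self_attention(q, k, v)
for t, w in enumerate(weights):
    print(t, [round(x, 3) for x in w])
```

The first position can only attend to itself, so its single weight is exactly 1.0. Later positions spread their weight across everything before them.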

Try It Yourself

The model is live. Type anything. Get Shakespeare.

Link: https://huggingface.co/spaces/gncll/shakespeare-gpt

What’s Next?

This showed me that I can build a lot with the same setup and some basic knowledge.

Just remember to double-check what you build.

You can use AI for verification too, but a final human review is still the best way to catch the errors it misses.