AI Hallucination Fix: 5 Methods That Reduce Errors 90%

AI hallucination cost a lawyer his case. Researchers at Google, Meta, and DeepMind have published ways to fight it. Here are 5 copy-paste prompts that reduce AI errors by up to 90%.

A lawyer used ChatGPT for legal research. The AI cited six court cases. None of them existed. He was sanctioned by the court.

This is what AI hallucination looks like in real life. Confident. Convincing. Completely wrong.

But here’s what most people don’t know: researchers at Google, Meta, Cambridge, and DeepMind have already tackled this problem. They published methods that reduce hallucinations by up to 90%.

The problem? These papers are buried in academic journals.

Nobody reads them.

I did. And I turned them into prompts you can copy and paste today.

1. What Is AI Hallucination?

AI hallucination is when a language model generates information that sounds correct but is completely fabricated.

The AI doesn’t “lie” intentionally.

It predicts the most likely next word based on patterns. When it doesn’t have real information, it fills the gap with plausible-sounding fiction (a hallucination).

Common hallucinations:

- Fake citations: Papers, court cases, or studies that don’t exist

- Wrong facts: Incorrect dates, numbers, or names stated with confidence

- Invented details: Fabricated quotes, events, or relationships

- Mixed information: Combining real facts into false conclusions

2. Why Do AI Models Hallucinate?

Three core reasons:

a. Training to predict, not verify.

LLMs are trained to predict the next most probable word. They optimize for fluency, not accuracy.

Under this objective, a confident wrong answer can score higher than an uncertain correct one.

b. Knowledge gaps

The model encounters questions outside its training data.

Instead of saying “I don’t know,” it generates plausible-sounding text to fill the gap.

c. Pattern over-matching

The model sees patterns and extends them incorrectly.

If it knows “Einstein won the Nobel Prize,” it might hallucinate that “Einstein won the Nobel Prize for relativity” (he didn’t; it was for the photoelectric effect).
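Here is a toy sketch of the “predict, not verify” idea, using the Einstein example. The probabilities are invented for illustration and do not come from any real model, and `toy_next_phrase_probs` and `greedy_pick` are hypothetical names. The point is only that picking the most likely continuation involves no fact check at all.

```python
# Toy illustration only: these probabilities are made up, not from a real model.
toy_next_phrase_probs = {
    "relativity": 0.62,                # the phrase most associated with Einstein
    "the photoelectric effect": 0.31,  # the historically correct answer
    "quantum mechanics": 0.07,
}


def greedy_pick(probs: dict[str, float]) -> str:
    """Return the continuation with the highest probability (greedy decoding)."""
    return max(probs, key=probs.get)


prompt = "Einstein won the Nobel Prize for"
completion = greedy_pick(toy_next_phrase_probs)

# Nothing here checks whether the sentence is true; the likeliest phrase wins.
print(f"{prompt} {completion}.")
```

In this toy setup the fluent but wrong continuation wins simply because it has the highest score.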

3. How Can You Reduce AI Hallucinations With Simple Prompts?

Before we get to the advanced methods, here are basic techniques anyone can use:

Ask the AI to cite sources:

Answer my question and cite your sources. If you’re not sure, say “I’m not certain.”

Give permission to say “I don’t know”:

If you don’t have accurate information, say “I don’t know” instead of guessing.

Request confidence levels:

Answer this question and rate your confidence (high/medium/low) for each claim.

Use specific, narrow questions:

Instead of “Tell me about World War 2,” ask “What date did D-Day occur?”

4. What Tools Help Prevent AI Hallucination?

Some AI tools are more resistant to hallucination because they ground their answers in sources you provide or can check:

a. NotebookLM (Google)

NotebookLM only answers from documents you upload. It doesn’t use external knowledge. If the answer isn’t in your sources, it says so.

It is more than a learning tool, by the way. I wrote an article about how I’ve built entire products with it.

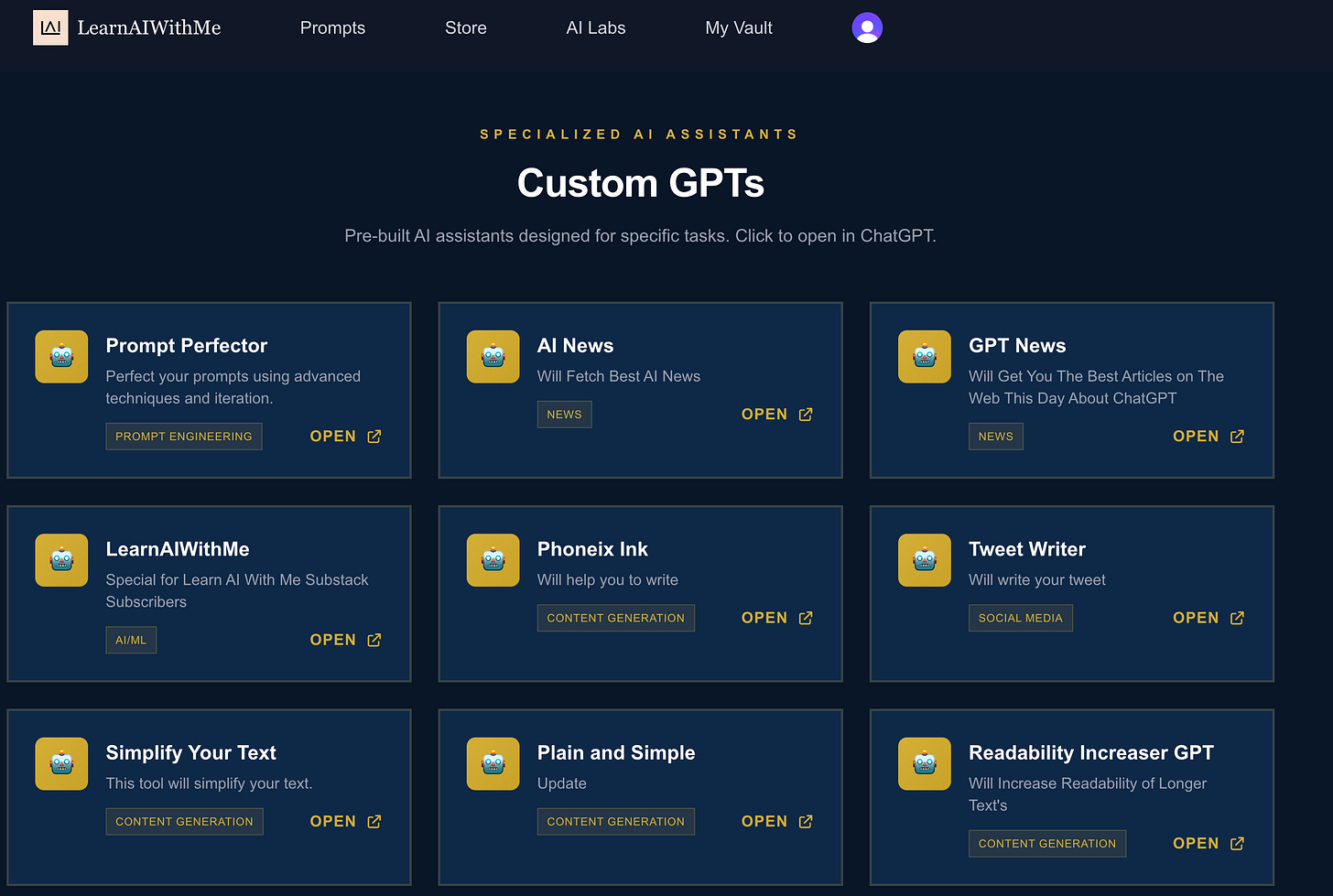

b. Custom GPTs (OpenAI)

In the Vault store, we have 10+ Custom GPTs. Create a GPT with your own knowledge base. It answers primarily from your uploaded files.

I’ve created a Custom GPT named Jarvis, which helps us track down academic papers faster, in this one.

c. Gemini Gems (Google)

Similar to Custom GPTs. Create a Gem with specific instructions and documents. I’ve created Gems in this one to make my AI honest.

d. Perplexity AI

Searches the web in real time and attaches source links to the claims in its answers, so you can check them.

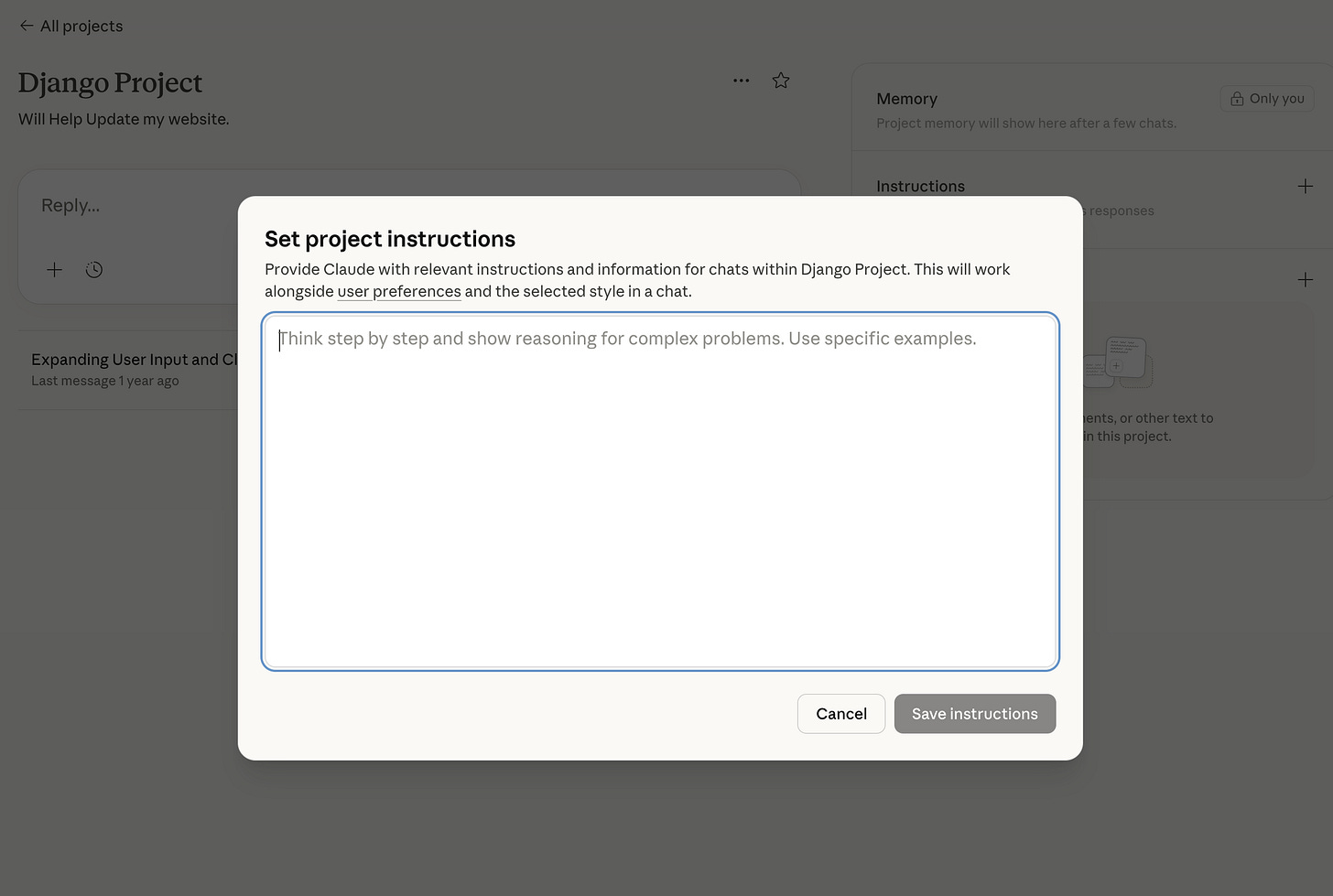

e. Claude with Projects (Anthropic)

Upload documents to a Project. Claude prioritizes that content when answering.

You can adjust the instructions of the project to make it less prone to hallucination.
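The idea these tools share, answering from your documents rather than from memory, can be approximated in a plain prompt. The sketch below is a generic illustration and not how NotebookLM, Custom GPTs, Gems, Perplexity, or Claude Projects work internally. The function name `build_grounded_prompt`, the instruction wording, and the sample documents are all assumptions made for this example.

```python
def build_grounded_prompt(documents: list[str], question: str) -> str:
    """Build a prompt that restricts the model to the supplied documents."""
    # Number each source so the answer can point back to it, e.g. [1], [2].
    sources = "\n\n".join(
        f"[{i}] {text.strip()}" for i, text in enumerate(documents, start=1)
    )
    return (
        "Answer using ONLY the sources below. "
        "Cite the source number for each claim. "
        'If the sources do not contain the answer, reply "Not in the sources."\n\n'
        f"Sources:\n{sources}\n\n"
        f"Question: {question}"
    )


# Sample documents for illustration; replace them with your own text.
docs = [
    "D-Day took place on 6 June 1944.",
    "Operation Overlord was the codename for the Battle of Normandy.",
]
print(build_grounded_prompt(docs, "What date did D-Day occur?"))
```

Paste the printed text into any chat model. The explicit “Not in the sources” instruction gives the model a way out other than guessing.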

5. The Vault Prompt: What Is the Reality Filter Prompt?

In the Vault, there are 600+ prompts waiting for you to customize, test, or open in ChatGPT.

Let’s try finding a prompt about hallucination.

After becoming a paid subscriber on Substack with the Inner Circle subscription type, sign up for the Vault with the same email address to access the content.

This is from our Vault, a system prompt that forces AI to label anything it can’t verify.

Use this as a system prompt or paste it before any question where accuracy matters.
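If you work with a chat model through code, those two options look roughly like this. This is a minimal sketch: the `role`/`content` message format is a convention used by several chat APIs, and it is assumed here rather than tied to any specific provider. `SYSTEM_PROMPT` is a placeholder for the prompt text, and `build_messages` and `prepend_to_question` are hypothetical helper names.

```python
# Placeholder only: paste the actual system prompt text here.
SYSTEM_PROMPT = "<paste your system prompt here>"


def build_messages(
    question: str, system_prompt: str = SYSTEM_PROMPT
) -> list[dict[str, str]]:
    """Option 1: send the prompt as a separate system message."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": question},
    ]


def prepend_to_question(question: str, system_prompt: str = SYSTEM_PROMPT) -> str:
    """Option 2: paste the prompt in front of the question in a single message."""
    return f"{system_prompt}\n\n{question}"


question = "What date did D-Day occur?"
print(build_messages(question))
print(prepend_to_question(question))
```

Option 1 fits tools that expose a system prompt or custom instructions field. Option 2 works in any chat window.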

6. The Science: 5 Methods From Top AI Labs

The techniques above are good. But researchers at Meta, Google, DeepMind, Princeton, and Cambridge developed methods that, according to their published papers, reduce hallucinations dramatically.

Each upcoming method includes:

- How it works (the science)

- Real examples from the original papers

- Copy-paste prompt templates

The prompts will also be added to the Vault, a platform available to paid subscribers on Substack.